multichannel instrument and composition

when we take an auditory point of view, we can perceive the world as a very rich multichannel sound system. from mono through stereo to dolby surround systems, we can follow the spread of the idea of bringing this richness into sound reproduction technology. there are some rare examples, like the IEM-cube, which offers 24 speakers for listening, and we can also mention artists like edgard varèse or francisco lópez, who created multichannel compositions to go beyond the ordinary listening experience.

this project is about developing a first version of a modular and adaptable multichannel instrument which can provide as many channels as needed and can operate both as a standalone sound installation and as a live instrument. the whole instrument is meant to be an open-source project (so it can be modified and adapted by other artists and programmers), and it is also meant to be a low-cost project (which does not necessarily imply that its sound will be lo-fi).

technical description

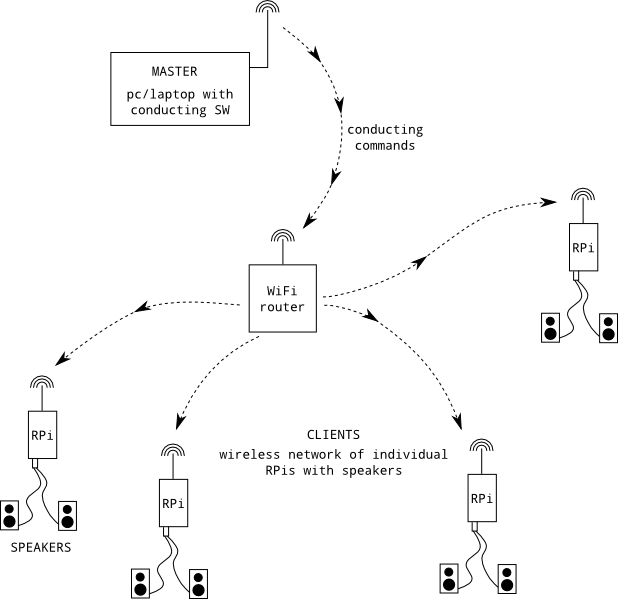

the whole instrument is made of a variable number of raspberry pi2 microcomputers (clients), each with a hifiberry shield. the total number of channels equals the number of clients times two (at the moment i have only 8 clients, so the instrument has 16 channels). the pi2 offers more computational power than earlier raspberry pi versions, and the hifiberry provides better sound quality than the integrated soundcard. all clients are connected through a wifi router. using wireless technology allows free and adaptable distribution of the speakers in a space or concert hall, so it is possible to create site-specific sound installations and performances. on the other hand, wifi somewhat limits the possibilities for realtime performance (network latency). the end of the sound chain consists of a small max98306 amplifier and small speakers.

all clients run linux with these applications: zynaddsubfx, fluidsynth and original sound software programmed in pure data (granular sampler, sample player, synth and oscillator bank). this configuration can be changed or enriched by adding other sound software and effect chains; the only limit is the computational power of the client.
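the channel arithmetic above can be sketched in python. this is only an illustration, not code from the project (which is written in pure data), and it assumes that channels are numbered from 0 and that channels 2k and 2k+1 are the left and right outputs of client k.

```python
from typing import List, Tuple

# each pi2 + hifiberry shield gives one stereo pair
CHANNELS_PER_CLIENT = 2

def total_channels(num_clients: int) -> int:
    """total number of output channels for a given number of clients."""
    return num_clients * CHANNELS_PER_CLIENT

def channel_to_output(channel: int) -> Tuple[int, int]:
    """map a global 0-based channel index to (client index, side).

    assumed convention: side 0 is the left output, side 1 the right output.
    """
    if channel < 0:
        raise ValueError("channel index must be non-negative")
    return divmod(channel, CHANNELS_PER_CLIENT)

def channel_layout(num_clients: int) -> List[Tuple[int, int]]:
    """(client, side) for every channel of the instrument, in channel order."""
    return [channel_to_output(ch) for ch in range(total_channels(num_clients))]

if __name__ == "__main__":
    print(total_channels(8))      # 16 channels with 8 clients
    print(channel_to_output(5))   # (2, 1): right output of the third client
```

with this convention, adding a client simply appends two channels at the end, so existing speaker assignments do not move.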

the clients are conducted by one master computer, which runs original software also programmed in pure data. it provides a basic interface, so every client can be conducted individually or in groups. the interface is modular: it is generated dynamically according to the number of speakers in use.
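a minimal sketch of how a master could address single clients or groups, assuming the clients listen with pure data's [netreceive -u] on udp and accept fudi messages (space-separated atoms terminated by a semicolon). the ip scheme, the port 3000 and the message names (`volume`, `play`) are hypothetical and not taken from the project; the per-client dictionary stands in for the dynamically generated interface.

```python
import socket
from typing import Dict, Iterable, List, Tuple

def make_clients(num_clients: int, base: str = "192.168.1.",
                 first: int = 101) -> Dict[int, str]:
    """hypothetical addressing scheme: client k lives at base + (first + k)."""
    return {k: f"{base}{first + k}" for k in range(num_clients)}

def fudi(*atoms) -> bytes:
    """encode one fudi message: space-separated atoms, ';' and a newline."""
    return (" ".join(str(a) for a in atoms) + ";\n").encode("ascii")

def group_messages(clients: Dict[int, str], group: Iterable[int],
                   *atoms) -> List[Tuple[str, bytes]]:
    """one (host, payload) pair per client in the group, duplicates removed."""
    msg = fudi(*atoms)
    return [(clients[k], msg) for k in sorted(set(group))]

def send(messages: List[Tuple[str, bytes]], port: int = 3000) -> None:
    """send each payload over udp; port 3000 is a hypothetical choice."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for host, payload in messages:
            sock.sendto(payload, (host, port))

if __name__ == "__main__":
    clients = make_clients(8)
    # address one client individually, then a group of three
    print(group_messages(clients, [4], "play", "track1"))
    print(group_messages(clients, [0, 2, 5], "volume", 0.5))
```

conducting "individually" is then just a group of size one, so the same code path serves both cases.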

a simplified graphical representation of the multichannel instrument described above looks like this:

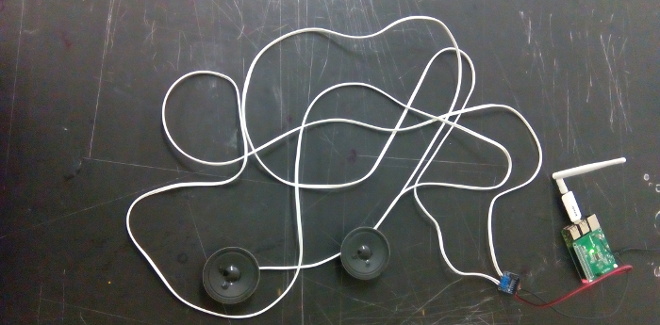

an individual client (raspberry pi2 + hifiberry shield + max98306 with speakers) is shown here:

a shortened recording of the standalone multichannel composition is here:

examples of the user interface:

and some more short examples from the multichannel composition:

limitations

as i was developing and testing the whole system, i realized that its most limiting aspect is the wireless connection. wifi is not very reliable at delivering packets on time. i was able to reach less than 10ms delay between the master computer and the clients, but sometimes (especially when the area is crowded by many other wifi access points) the delay rose to more than 20ms, which is unusable for precise musical composition. if a command that triggers a sample or note is delivered more than 10ms late, it can ruin a precise composition and produce a "reverb" effect, because the same event sounds at slightly different times from different speakers.

to avoid this problem i decided to use ntpd (the network time protocol daemon) to synchronize the clocks of all the clients with the master computer. here the results were more than satisfying: it is possible to synchronize all the clients with a deviation on the order of milliseconds and then trigger prepared composition tracks at a given time very precisely. this version of the system can therefore be used as a standalone multichannel instrument playing a prepared composition, but it still lacks functionality as a live instrument, because musical events (playing samples and notes) are sometimes delivered from the master to the clients with a delay, so it is not really possible to do, e.g., a rhythmically precise live improvisation.
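the timestamp approach can be sketched as follows, assuming every client clock is already disciplined by ntpd so that `time.time()` agrees across machines to within a few milliseconds. instead of a "play now" command, the master sends an absolute start time slightly in the future; the lead time (50ms here, a hypothetical value) has to exceed the worst observed network delay, so that a slow packet still arrives before the event. this is not the project's pure data code, only an illustration of the idea.

```python
import time
from typing import Callable

# hypothetical lead time: chosen to stay above the >20 ms worst case on wifi
DEFAULT_LEAD = 0.05

def schedule_time(now: float, lead: float = DEFAULT_LEAD) -> float:
    """master side: absolute start time (seconds since the epoch) for a cue."""
    return now + lead

def wait_and_fire(start_at: float,
                  action: Callable[[], None],
                  clock: Callable[[], float] = time.time,
                  sleep: Callable[[float], None] = time.sleep) -> float:
    """client side: wait until the ntp-synchronised clock reaches start_at,
    then run action. returns how late the action fired, in seconds.

    a cue that arrives after start_at still fires immediately here;
    a real client might prefer to drop it instead.
    """
    remaining = start_at - clock()
    if remaining > 0:
        sleep(remaining)
    lateness = max(0.0, clock() - start_at)
    action()
    return lateness

if __name__ == "__main__":
    cue = schedule_time(time.time())
    late = wait_and_fire(cue, lambda: print("play track"))
    print(f"fired {late * 1000:.2f} ms after the scheduled time")
```

the price is latency: every cue is delayed by the lead time, which is acceptable for a prepared composition but is exactly why this approach does not help with rhythmically precise live playing. the injectable `clock` and `sleep` arguments only exist so the timing logic can be tested without real waiting.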

further development - ver0.2

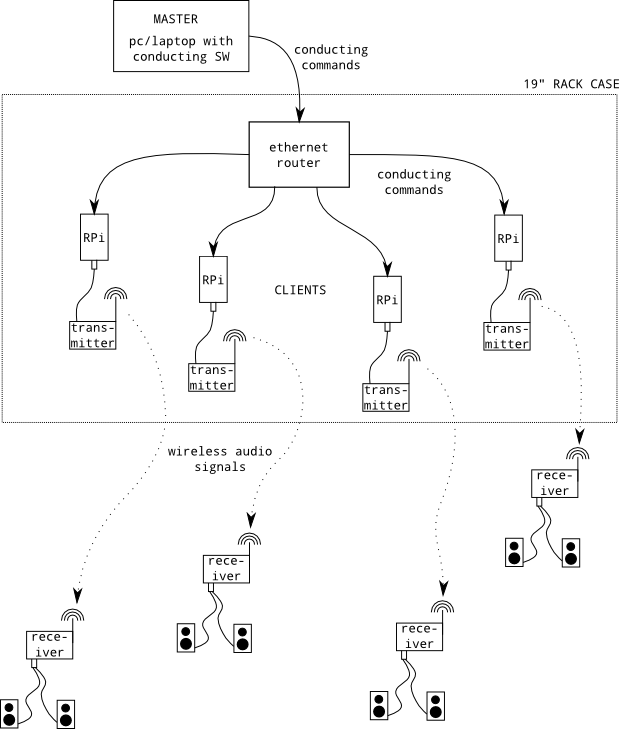

i believe the limitations mentioned above can be solved by removing the weakest part, the wifi connection, and replacing it with an ethernet connection, which is both faster and more reliable. the wireless aspect of the whole system can be kept by using fm transmitters to deliver the audio signals from the clients to the speakers. the new version of the system is shown schematically in image 3.

for the newer version of the system, it could also be possible to use the more powerful raspberry pi 3, which provides more computational power, so it can run more complex synthesizers and sound applications. i would like to program a special synth in the functional DSP language FAUST, which can produce very optimized code and save some CPU for other tasks. it will also be necessary to extend, reprogram, clean up and optimize the pure data code from ver0.1, because it currently provides only very basic functionality for conducting the whole multichannel system. finally, all the code should be published as open source so anyone else can use and modify it for their own purposes.

more recent tracks

requiem for czech audio-visual scene - released in his voice magazine

X - track for exhibition of adela součková

erroneous menfolk - noinput track (with matouš hejl), signals from arkhaim

old tracks

sofapurka